- 1. Why does WAN optimization exist in the first place?

- 2. How does WAN optimization actually work?

- 3. Does WAN optimization still work on encrypted traffic?

- 4. Where does WAN optimization live in modern architectures?

- 5. What are the benefits of WAN optimization today?

- 6. What are the limitations of WAN optimization?

- 7. How does WAN optimization differ from SD-WAN and application acceleration?

- 8. WAN optimization FAQs


What Is WAN Optimization? [+ How It Works & Modern Role]


WAN optimization is the process of improving how efficiently data travels across wide area networks by reducing latency, loss, and congestion.

It uses techniques such as compression, traffic shaping, and error correction to make network paths behave more predictably.

Today, these functions are built into SD-WAN and SASE data planes, where they enhance transport performance for cloud, SaaS, and hybrid environments.

Why does WAN optimization exist in the first place?

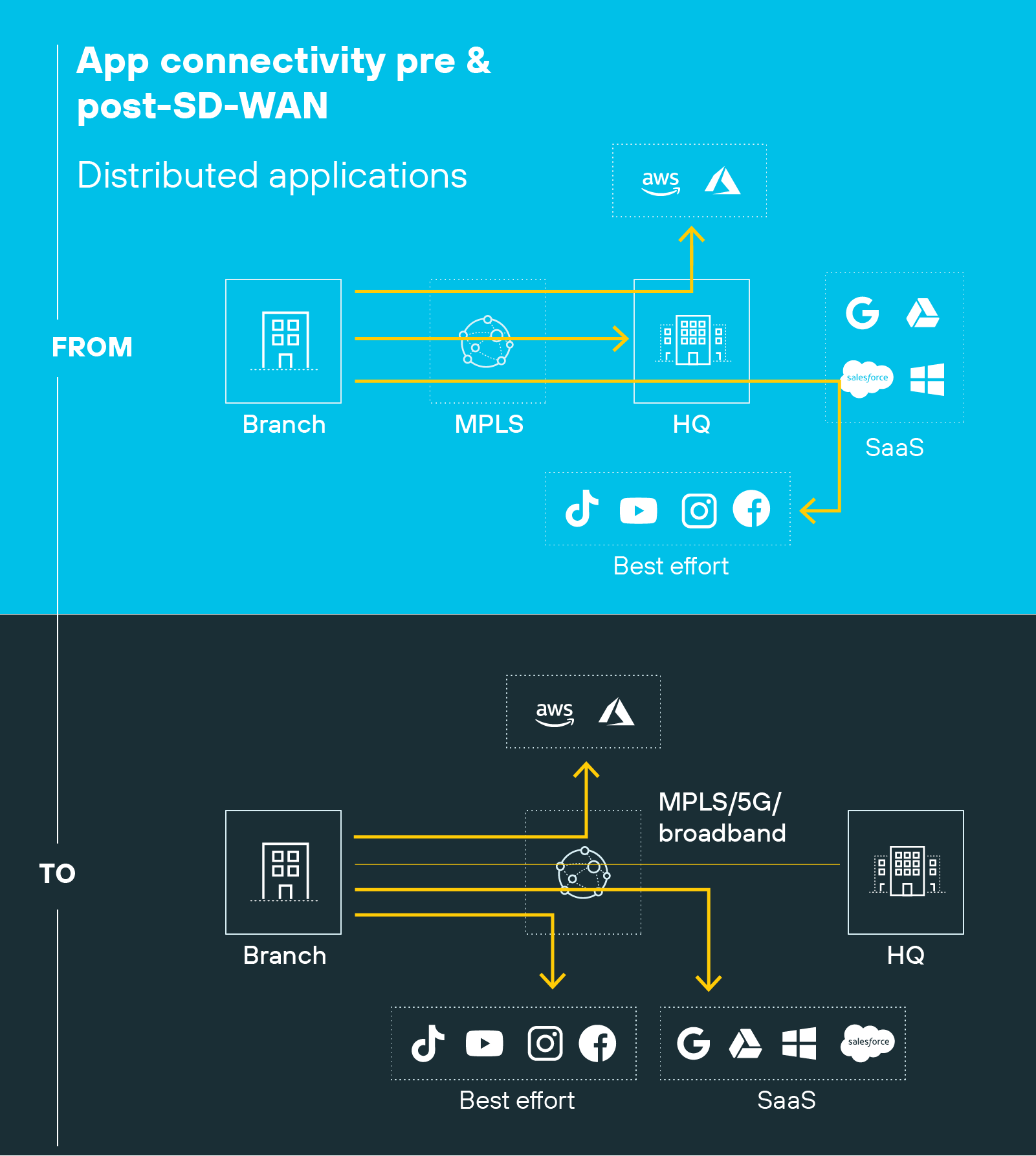

Wide area networks weren't designed for the kind of traffic they carry today.

In the early days, data had to move between fixed sites—data centers, branch offices, and remote users.

That distance introduced delay. TCP only allows a limited amount of unacknowledged data in flight, so every transaction involved repeated round trips of data and acknowledgments between sender and receiver. Over long links, those "chatty" sessions meant high latency, slow applications, and poorly used bandwidth.

The performance issues weren't just about distance. They were built into the protocol design itself.

To overcome that, network infrastructure vendors introduced WAN acceleration appliances. These devices intercepted TCP sessions and acted as local proxies. They reduced redundant data transfers through caching and compression. They also altered TCP behavior to keep more data in flight, for example by acknowledging segments locally instead of waiting for the distant receiver, a technique known as TCP spoofing.
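To make the latency problem concrete, here is a minimal Python sketch of the arithmetic behind it. It assumes a single TCP flow whose throughput is limited only by its window (no loss, no slow start): a sender can have at most one window of unacknowledged data in flight per round trip, so throughput cannot exceed window / RTT. The function names and the 80 ms path are illustrative assumptions.

```python
def max_window_limited_bps(window_bytes: int, rtt_s: float) -> float:
    """Throughput ceiling for one flow that can keep only `window_bytes` in flight.

    Each round trip delivers at most one window, so throughput <= window / RTT.
    """
    if rtt_s <= 0:
        raise ValueError("RTT must be positive")
    return window_bytes * 8 / rtt_s


def bandwidth_delay_product_bytes(link_bps: float, rtt_s: float) -> float:
    """Bytes that must be in flight to keep a link of `link_bps` busy for one RTT."""
    return link_bps * rtt_s / 8


if __name__ == "__main__":
    rtt = 0.080      # assumed 80 ms round trip between distant sites
    window = 65_535  # largest TCP window without window scaling
    ceiling = max_window_limited_bps(window, rtt) / 1e6
    needed = bandwidth_delay_product_bytes(100e6, rtt)
    print(f"window-limited ceiling: {ceiling:.1f} Mbit/s")
    print(f"in-flight data needed to fill 100 Mbit/s: {needed:,.0f} bytes")
```

Even on a 100 Mbit/s link, a 64 KB window caps this flow at roughly 6.5 Mbit/s, which is why keeping more data in flight was the central goal of early acceleration.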

For a time, that approach worked. Especially in private MPLS networks where most enterprise traffic stayed within the corporate perimeter.

But then the network changed. Applications moved to the cloud. Users connected from anywhere. Traffic patterns shifted from site-to-site to site-to-cloud and cloud-to-cloud.

The traditional WAN box could no longer see—or optimize—most of that traffic.

Which meant that WAN optimization had to evolve.

And that's why today it's no longer a standalone appliance. It's a set of capabilities embedded into SD-WAN and SASE data planes. Its role is to make transport more efficient across hybrid networks that span private links, broadband, and the public internet.

How does WAN optimization actually work?

The problems that drove early WAN acceleration still exist. But the way optimization works has changed dramatically.

Essentially, WAN optimization works by improving how data flows across long-distance network paths.

It reduces latency, minimizes packet loss, and ensures that traffic moves as efficiently as possible between endpoints. The techniques used fall into several categories that together make the network behave more predictably and with higher throughput.

More specifically:

Data reduction comes first.

Traditional WAN optimization appliances compressed data, removed redundant information, and cached files that users frequently requested. This reduced the amount of data that needed to travel over expensive or limited WAN links. However, most of those methods depend on seeing the contents of the traffic.
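Removing redundant information usually meant some form of byte-level redundancy elimination. The sketch below assumes both appliances keep a synchronized chunk cache and, for simplicity, split data into fixed-size chunks; real products typically use content-defined chunking so an insertion does not shift every later boundary. The `ByteCache` class and its token format are hypothetical.

```python
from __future__ import annotations

import hashlib


class ByteCache:
    """Toy redundancy-elimination cache; one instance runs at each end of the link."""

    def __init__(self, chunk_size: int = 256) -> None:
        self.chunk_size = chunk_size
        self.chunks: dict[bytes, bytes] = {}  # SHA-256 digest -> chunk bytes

    def encode(self, data: bytes) -> list[tuple[str, bytes]]:
        """Sender side: replace chunks the peer has already seen with their digest."""
        tokens: list[tuple[str, bytes]] = []
        for i in range(0, len(data), self.chunk_size):
            chunk = data[i : i + self.chunk_size]
            digest = hashlib.sha256(chunk).digest()
            if digest in self.chunks:
                tokens.append(("ref", digest))  # 32 bytes instead of the full chunk
            else:
                self.chunks[digest] = chunk
                tokens.append(("raw", chunk))
        return tokens

    def decode(self, tokens: list[tuple[str, bytes]]) -> bytes:
        """Receiver side: learn raw chunks and expand references from the cache."""
        out = bytearray()
        for kind, payload in tokens:
            if kind == "raw":
                self.chunks[hashlib.sha256(payload).digest()] = payload
                out += payload
            else:
                out += self.chunks[payload]
        return bytes(out)


def wire_size(tokens: list[tuple[str, bytes]]) -> int:
    """Bytes actually sent, ignoring per-token framing overhead."""
    return sum(len(payload) for _, payload in tokens)
```

For a 4,096-byte file with no internal repetition, the first transfer sends all 4,096 bytes, while a second transfer of the same file sends only 16 digests of 32 bytes each (512 bytes). This only works because the appliances can read the plaintext.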

Modern encryption protocols such as TLS 1.3 and QUIC hide the payload, and encrypted data contains almost no repeated patterns, so compression and deduplication now provide much smaller gains. Caching still helps in a few predictable cases, but most optimization today happens at the transport level rather than the content level.
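The effect of encryption on compression is easy to demonstrate with Python's standard `zlib` module. As an assumption, this sketch uses uniformly random bytes as a stand-in for ciphertext, since well-encrypted output is designed to be indistinguishable from random data; no real encryption is performed.

```python
import random
import zlib


def compression_ratio(payload: bytes) -> float:
    """Original size divided by zlib-compressed size (higher means more savings)."""
    return len(payload) / len(zlib.compress(payload, 9))


# Repetitive plaintext, similar to a chatty protocol or a record sent many times.
plaintext = b'{"user": "alice", "status": "ok", "items": []}\n' * 200

# Stand-in for ciphertext of the same length (random bytes, not real encryption).
ciphertext_like = random.Random(42).randbytes(len(plaintext))

print(f"plaintext ratio:  {compression_ratio(plaintext):.1f}x")
print(f"ciphertext ratio: {compression_ratio(ciphertext_like):.2f}x")
```

The plaintext shrinks dramatically, while the random stand-in comes out slightly larger than it went in, which is the same outcome a WAN optimizer sees when it tries to compress TLS or QUIC payloads.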

Transport optimization focuses on how data packets move.

Early systems tuned TCP behavior to allow more data to stay in transit before waiting for an acknowledgment. Standard TCP extensions such as window scaling and time-based loss detection (RACK-TLP) brought comparable improvements into the endpoints' own TCP stacks.
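Window scaling shows what a protocol-level fix looks like. Under RFC 7323, each side announces a shift count (0 to 14) during the handshake, and the 16-bit window field it advertises is then interpreted as that value shifted left by the count. The helper names below are illustrative.

```python
WINDOW_FIELD_MAX = 0xFFFF  # the TCP header's window field is 16 bits wide
MAX_SHIFT = 14             # RFC 7323 caps the window-scale shift count at 14


def effective_window(field_value: int, shift: int) -> int:
    """Window in bytes after applying the negotiated scale factor."""
    if not 0 <= shift <= MAX_SHIFT:
        raise ValueError("shift count must be between 0 and 14")
    return field_value << shift


def required_shift(bdp_bytes: int) -> int:
    """Smallest shift count whose maximum window covers `bdp_bytes`."""
    for shift in range(MAX_SHIFT + 1):
        if effective_window(WINDOW_FIELD_MAX, shift) >= bdp_bytes:
            return shift
    raise ValueError("BDP exceeds the largest scalable TCP window (about 1 GiB)")
```

A 100 Mbit/s path with an 80 ms RTT needs about 1,000,000 bytes in flight; `required_shift(1_000_000)` returns 4, which allows a maximum window of 1,048,560 bytes.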

Modern networks increasingly use QUIC, which builds similar congestion control and recovery logic directly into the protocol. These refinements reduce round-trip delays and prevent unnecessary retransmissions.
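Much of that recovery logic depends on a running estimate of round-trip time. The sketch below follows the smoothing rules described in RFC 9002 (QUIC loss detection and congestion control), simplified by assuming the peer reports zero acknowledgment delay. The class and method names are illustrative.

```python
from __future__ import annotations

from dataclasses import dataclass

K_GRANULARITY = 0.001  # seconds; RFC 9002's recommended timer granularity


@dataclass
class RttEstimator:
    max_ack_delay: float = 0.025  # seconds; QUIC's default max_ack_delay
    smoothed_rtt: float | None = None
    rttvar: float = 0.0
    min_rtt: float = float("inf")

    def on_sample(self, latest_rtt: float) -> None:
        """Fold one RTT measurement into the running estimates (ack delay assumed 0)."""
        self.min_rtt = min(self.min_rtt, latest_rtt)
        if self.smoothed_rtt is None:
            # The first sample seeds both estimates.
            self.smoothed_rtt = latest_rtt
            self.rttvar = latest_rtt / 2
            return
        # Exponentially weighted moving averages with gains of 1/8 and 1/4.
        self.smoothed_rtt = 7 / 8 * self.smoothed_rtt + 1 / 8 * latest_rtt
        rttvar_sample = abs(self.smoothed_rtt - latest_rtt)
        self.rttvar = 3 / 4 * self.rttvar + 1 / 4 * rttvar_sample

    def probe_timeout(self) -> float:
        """Probe timeout (PTO): how long to wait before sending a probe packet."""
        if self.smoothed_rtt is None:
            raise RuntimeError("no RTT samples yet")
        return self.smoothed_rtt + max(4 * self.rttvar, K_GRANULARITY) + self.max_ack_delay
```

Because the timeout tracks measured RTT and its variation rather than a fixed timer, a stable path converges to a tight timeout and avoids both premature and long-delayed retransmissions.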

Loss mitigation addresses what happens when packets are dropped.

Instead of waiting for retransmissions, many systems use forward error correction (FEC). Redundant parity data is sent alongside the original packets so the receiver can rebuild a missing packet without waiting for it to be resent. This improves throughput on unstable links such as broadband or cellular paths.
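The simplest form of forward error correction is XOR parity: one extra packet per group lets the receiver rebuild any single lost packet in that group. This is a minimal sketch assuming equal-length packets; production systems often use stronger codes, such as Reed-Solomon, that can repair several losses per group.

```python
from __future__ import annotations

from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def make_parity(group: list[bytes]) -> bytes:
    """One parity packet protecting a group of equal-length packets."""
    if len({len(p) for p in group}) != 1:
        raise ValueError("packets in a group must be padded to equal length")
    return reduce(xor_bytes, group)


def recover(received: list[bytes | None], parity: bytes) -> list[bytes]:
    """Rebuild at most one missing packet (marked None) from the survivors and parity."""
    missing = [i for i, p in enumerate(received) if p is None]
    if len(missing) > 1:
        raise ValueError("single XOR parity can repair only one loss per group")
    repaired = list(received)
    if missing:
        survivors = [p for p in received if p is not None]
        # XOR of the parity with every surviving packet leaves the lost packet.
        repaired[missing[0]] = reduce(xor_bytes, survivors, parity)
    return repaired
```

With four data packets and one parity packet, the overhead is 25%. That is the trade-off FEC makes: spend some bandwidth up front to avoid a full round trip for a retransmission.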

Some implementations also buffer and re-order packets on arrival, correcting out-of-order delivery and smoothing the jitter introduced by varying path quality.
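A reorder buffer holds packets that arrive early and releases them in sequence once the gap fills. This sketch uses hypothetical names and a heap keyed on sequence number. As a simplifying assumption, it gives up on a gap once too many packets are waiting, where real implementations would typically also use a timer.

```python
from __future__ import annotations

import heapq


class ReorderBuffer:
    """Releases packets in sequence order, holding early arrivals until gaps fill."""

    def __init__(self, first_seq: int = 0, max_held: int = 64) -> None:
        self.next_seq = first_seq
        self.max_held = max_held
        self._held: list[tuple[int, bytes]] = []

    def push(self, seq: int, payload: bytes) -> list[bytes]:
        """Accept one packet and return everything that is now deliverable in order."""
        if seq >= self.next_seq:
            heapq.heappush(self._held, (seq, payload))
        # Too much waiting: assume the missing packet is lost and skip the gap.
        if len(self._held) > self.max_held:
            self.next_seq = self._held[0][0]
        out: list[bytes] = []
        while self._held and self._held[0][0] <= self.next_seq:
            s, p = heapq.heappop(self._held)
            if s == self.next_seq:
                out.append(p)
                self.next_seq += 1
            # s < next_seq means a duplicate of something already delivered: drop it.
        return out
```

Packets 1 and 2 arriving before packet 0 are held, then all three are released together when packet 0 arrives; a late duplicate of packet 1 is silently dropped.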

Traffic management ensures that the most important traffic gets priority.

Quality of service policies classify packets and allocate bandwidth based on real-time needs. Mechanisms defined in standards like DiffServ mark and schedule packets to meet latency and loss targets.
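Marking and scheduling can be sketched in a few lines. The DSCP values below are standard code points (EF = 46, AF41 = 34, AF31 = 26, CS1 = 8, default = 0); the application-class names, the priority order, and the strict-priority scheduler are illustrative assumptions. Real devices usually prefer weighted schedulers so that low-priority traffic is not starved.

```python
from __future__ import annotations

import heapq
import itertools
import socket

# Standard DiffServ code points for a few common traffic classes.
DSCP = {
    "voice": 46,     # EF, expedited forwarding
    "video": 34,     # AF41
    "business": 26,  # AF31
    "default": 0,    # best effort
    "bulk": 8,       # CS1, traditionally used for low-priority data
}

# Hypothetical service order: lower number is dequeued first.
PRIORITY = {46: 0, 34: 1, 26: 2, 0: 3, 8: 4}


class StrictPriorityQueue:
    """Toy egress scheduler: always serves the highest-priority class first."""

    def __init__(self) -> None:
        self._heap: list = []
        self._order = itertools.count()  # keeps FIFO order within a class

    def enqueue(self, app_class: str, packet: bytes) -> None:
        dscp = DSCP.get(app_class, 0)  # unknown classes fall back to best effort
        heapq.heappush(self._heap, (PRIORITY[dscp], next(self._order), dscp, packet))

    def dequeue(self) -> tuple[int, bytes]:
        _, _, dscp, packet = heapq.heappop(self._heap)
        return dscp, packet


def mark_socket(sock: socket.socket, dscp: int) -> None:
    """Request a DSCP mark on outgoing IPv4 packets (platform support varies).

    DSCP occupies the upper six bits of the former TOS byte, hence the shift.
    """
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_TOS, dscp << 2)
```

Whatever order packets arrive in, voice (EF) leaves first and bulk (CS1) leaves last.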

Modern SD-WAN software extends these principles through adaptive path selection. When a link shows high delay or loss, the system automatically shifts flows to a better path.
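Adaptive path selection comes down to comparing live path metrics against a policy. The sketch below assumes a hypothetical SLA with latency, jitter, and loss thresholds. It keeps the current path while that path still complies, to avoid flapping, and otherwise picks the compliant path with the lowest loss, then the lowest latency plus jitter. All names and numbers are illustrative.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class PathStats:
    name: str
    latency_ms: float
    jitter_ms: float
    loss_pct: float


@dataclass
class SlaPolicy:
    max_latency_ms: float
    max_jitter_ms: float
    max_loss_pct: float


def meets_sla(path: PathStats, sla: SlaPolicy) -> bool:
    return (path.latency_ms <= sla.max_latency_ms
            and path.jitter_ms <= sla.max_jitter_ms
            and path.loss_pct <= sla.max_loss_pct)


def choose_path(paths: list[PathStats], sla: SlaPolicy, current: str | None = None) -> str:
    compliant = [p for p in paths if meets_sla(p, sla)]
    # Stay on the current path while it is healthy, to avoid flapping between links.
    if current is not None and any(p.name == current for p in compliant):
        return current
    # Otherwise pick the best compliant path; if none comply, pick the least-bad path.
    candidates = compliant or paths
    best = min(candidates, key=lambda p: (p.loss_pct, p.latency_ms + p.jitter_ms))
    return best.name
```

If a broadband link degrades to 1.5% loss against a 1% loss threshold, a flow on it moves to the cleanest compliant path, while a flow already on a compliant LTE path stays put.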

Visibility and telemetry tie all these pieces together.

Continuous monitoring collects data on delay, jitter, and packet loss across every tunnel. That feedback loop allows the network to adjust optimization behavior dynamically. It's the difference between static configuration and real-time control.
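Jitter is one of the metrics such a feedback loop tracks. A widely used estimator is the interarrival jitter defined in RFC 3550 (RTP), which smooths the change in one-way transit time with a gain of 1/16. This sketch assumes a send and a receive timestamp for every packet, in the same units; a fixed clock offset between sender and receiver cancels out because only differences are used.

```python
from __future__ import annotations


def interarrival_jitter(send_times: list[float], recv_times: list[float]) -> float:
    """RFC 3550-style interarrival jitter, in the same units as the timestamps."""
    if len(send_times) != len(recv_times):
        raise ValueError("need one send and one receive timestamp per packet")
    jitter = 0.0
    for i in range(1, len(send_times)):
        # Change in one-way transit time between consecutive packets; any fixed
        # clock offset between sender and receiver cancels out here.
        d = (recv_times[i] - recv_times[i - 1]) - (send_times[i] - send_times[i - 1])
        jitter += (abs(d) - jitter) / 16
    return jitter
```

A path with constant delay reports zero jitter no matter how long that delay is; only variation in transit time moves the estimate.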

Important: All of these functions operate in the data plane, not the control plane. They act directly on how packets move, while the control plane sets the policies, such as path preferences, that govern them.

In modern architectures, WAN optimization has become an embedded capability that quietly maintains performance across hybrid and cloud-based networks.

Does WAN optimization still work on encrypted traffic?

Yes, WAN optimization still works on encrypted traffic. But differently than it used to.

Encryption introduced a new challenge for WAN optimization. Older techniques relied on inspecting data in transit. But now, most of that traffic is protected by protocols that hide its contents end to end.

Here's why.

Modern encryption protocols such as TLS 1.3, IPsec, and QUIC now protect the large majority of sessions across the internet. All three encrypt the payload, and QUIC and IPsec tunnel mode also hide most transport-layer header information. That prevents intermediaries from reading or modifying the data as it moves.

As a result, the classic optimization methods like compression and deduplication no longer apply in most cases. Those techniques depend on visibility into repeated patterns within the payload, which encrypted data intentionally conceals.

So what still works?

Plenty.

Optimization now focuses on what remains observable without breaking encryption. Basic network characteristics such as round-trip time, packet loss, and jitter can still be measured from packet timing, unencrypted outer headers, and the optimizer's own tunnel headers. Those measurements allow systems to adapt traffic flow even when content is hidden.
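For example, IPsec ESP carries its sequence number in the clear, and an optimization tunnel can add its own per-packet counter in an outer header, so loss and reordering can be estimated without touching the payload. This sketch assumes such a counter increments by one per packet and does not wrap within the sample; the function name is illustrative.

```python
from __future__ import annotations


def loss_and_reordering(seqs: list[int]) -> tuple[float, int]:
    """Estimate loss rate and count late arrivals from per-packet sequence numbers.

    Assumes counters increase by one per packet and do not wrap in this sample.
    """
    if not seqs:
        return 0.0, 0
    seen: set[int] = set()
    reordered = 0
    highest = seqs[0]
    for s in seqs:
        if s < highest and s not in seen:
            reordered += 1  # arrived after a higher-numbered packet
        highest = max(highest, s)
        seen.add(s)
    expected = highest - min(seen) + 1
    lost = expected - len(seen)
    return lost / expected, reordered
```

For the arrival sequence 1, 2, 3, 5, 4, 7, 8, packet 6 never shows up (1 of 8 expected, or 12.5% loss) and packet 4 counts as reordered rather than lost.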

Forward error correction and pacing can also be applied below the encryption layer, treating encrypted packets as opaque units, to recover from packet loss, reduce it, and smooth delivery. And quality of service policies still shape and prioritize traffic because they operate on packet behavior and header markings, not payload content.
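Pacing spreads a burst of packets out at a target rate so that queues along the path are less likely to overflow. This minimal sketch only computes send times for a given rate; how that rate is chosen (for example, from a congestion window and smoothed RTT) is an assumption left outside the example.

```python
from __future__ import annotations


def pacing_schedule(packet_sizes: list[int], rate_bps: float, start_s: float = 0.0) -> list[float]:
    """Send times that space packets evenly at `rate_bps` instead of bursting them."""
    if rate_bps <= 0:
        raise ValueError("rate must be positive")
    times = []
    t = start_s
    for size in packet_sizes:
        times.append(t)
        t += size * 8 / rate_bps  # serialization time of this packet at the paced rate
    return times
```

At 12 Mbit/s, four 1,500-byte packets leave 1 ms apart instead of back to back. Because pacing looks only at packet sizes and timing, it works the same on encrypted traffic.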

There are also new ideas on the horizon.

Researchers are exploring "sidecar" models that improve transport performance without decrypting traffic. In these designs, lightweight companion protocols exchange performance feedback separately from the encrypted flow. This approach maintains privacy while still allowing in-path systems to react to congestion or loss in real time.

In short: WAN optimization continues to work on encrypted networks. Just differently. It's evolving away from deep packet manipulation toward intelligent, in-path enhancement that respects encryption boundaries while keeping data moving efficiently.

Where does WAN optimization live in modern architectures?

WAN optimization no longer lives in a single device or location. It's become a distributed capability built into modern network architectures.

Instead of a standalone appliance sitting between data centers, it now operates inside the same software-defined infrastructure that manages connectivity across clouds, users, and branches.

Here's how that shift happened.

Early WAN optimization hardware was deployed at fixed points. Often one box at the data center and another at each branch. Those systems accelerated specific links but struggled once traffic began flowing directly to cloud applications.

Modern SD-WAN architectures replaced those fixed appliances with software functions that run wherever the data actually moves. Now, optimization takes place within SD-WAN edges, cloud on-ramps, and secure access service edge (SASE) points of presence distributed across regions.

Important: The control and data planes are separate. Controllers handle orchestration, pushing policies, defining path preferences, and managing identity. The data plane carries out those policies in real time.

WAN optimization operates in that data plane. It enhances transport efficiency within the encrypted tunnels that connect users, sites, and clouds, while the controller only dictates how and when those optimizations apply.
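The division of labor can be sketched as two pieces: a policy object that a controller pushes, and an edge that only applies whatever policy it last accepted. Every name and field here is hypothetical, chosen to mirror the description above rather than any vendor's API.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class OptimizationPolicy:
    """What a controller might push to every edge (hypothetical fields)."""
    app_class: str
    preferred_paths: tuple[str, ...]
    fec_enabled: bool
    dscp: int


class DataPlaneEdge:
    """Applies the last accepted policy table per flow; it never computes policy."""

    def __init__(self) -> None:
        self.policies: dict[str, OptimizationPolicy] = {}
        self.version = 0

    def install(self, policies: list[OptimizationPolicy], version: int) -> bool:
        """Controller push: replace the whole table, ignoring stale or repeated versions."""
        if version <= self.version:
            return False
        self.policies = {p.app_class: p for p in policies}
        self.version = version
        return True

    def handle_flow(self, app_class: str, healthy_paths: list[str]) -> dict:
        """Per-flow decision made locally from the installed policy and live path health."""
        policy = self.policies.get(app_class)
        if policy is None:
            # No policy for this class: first healthy path, no FEC, best-effort marking.
            return {"path": healthy_paths[0], "fec": False, "dscp": 0}
        path = next((p for p in policy.preferred_paths if p in healthy_paths), healthy_paths[0])
        return {"path": path, "fec": policy.fec_enabled, "dscp": policy.dscp}
```

The edge keeps forwarding with its last policy if the controller is unreachable, which is one practical benefit of separating the two planes.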

Cloud providers also use their own middle-mile optimization. Services like global accelerators and virtual WAN fabrics route traffic through high-performance backbones with consistent latency and throughput.

These capabilities mirror the principles of WAN optimization but on a global scale, reducing the variability of internet routing and improving access to SaaS and cloud workloads.

Modern implementations take that even further.

In distributed architectures, secure connectivity is maintained through continuous key rotation and independent data-plane rekeying. That allows large-scale meshes of branch, cloud, and user connections to stay both secure and performant.

Ultimately, WAN optimization now lives everywhere traffic flows: embedded into the software, protocols, and fabrics that define today's enterprise networks.


What are the benefits of WAN optimization today?

WAN optimization continues to deliver measurable network performance improvements. But for different reasons than it once did.

It no longer focuses on bandwidth compression. Today, its value comes from improving how traffic behaves across long or unpredictable network paths.

Here's what that looks like in practice:

- Fewer retransmissions and less packet loss.

Modern WAN optimization reduces packet loss and its impact through techniques such as forward error correction and adaptive pacing. By reducing retransmission events, it shortens completion times for data flows and keeps sessions stable even when links experience jitter or brief interruptions.

- Lower latency and faster response times.

Smarter congestion control and dynamic path selection reduce round-trip delay. When delay or congestion appears on one link, traffic can move to a faster or cleaner path. That optimization directly improves application responsiveness. Especially for SaaS, cloud, and collaboration traffic.

- Higher and more consistent throughput.

By maintaining more data in flight and recovering from loss without waiting for retransmits, optimization raises effective throughput on high-latency or lossy links. That consistency keeps utilization closer to link capacity and supports large data transfers more efficiently.

- More predictable performance for modern workloads.

SaaS, video, and real-time collaboration depend on low jitter and stable delivery. WAN optimization smooths packet timing and order, producing a steadier user experience across hybrid and cloud networks.

In short:

Modern WAN optimization improves loss recovery, latency, throughput, and predictability. It's no longer about sending less data. It's about keeping data moving smoothly and consistently across every available path.
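Why does loss recovery raise throughput so much on long paths? A common rule of thumb is the approximation from Mathis et al. for a single Reno-style TCP flow: throughput ≈ (MSS / RTT) × (C / √p), with C ≈ 1.22. It does not describe CUBIC, BBR, or QUIC precisely, so treat this sketch as an illustration of the trend rather than a prediction.

```python
import math

MATHIS_C = math.sqrt(3 / 2)  # about 1.22, the constant commonly quoted for this model


def mathis_ceiling_bps(mss_bytes: int, rtt_s: float, loss_rate: float) -> float:
    """Approximate throughput ceiling of one Reno-style TCP flow."""
    if rtt_s <= 0 or not 0 < loss_rate < 1:
        raise ValueError("need a positive RTT and a loss rate between 0 and 1")
    return (mss_bytes * 8 / rtt_s) * (MATHIS_C / math.sqrt(loss_rate))


if __name__ == "__main__":
    for loss in (0.01, 0.001):
        mbps = mathis_ceiling_bps(1460, 0.080, loss) / 1e6
        print(f"loss {loss:.1%}, RTT 80 ms: about {mbps:.1f} Mbit/s")
```

Cutting effective loss from 1% to 0.1% raises the ceiling by about 3.2x, and halving RTT doubles it, which is why loss recovery and path selection matter most on long, lossy links.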

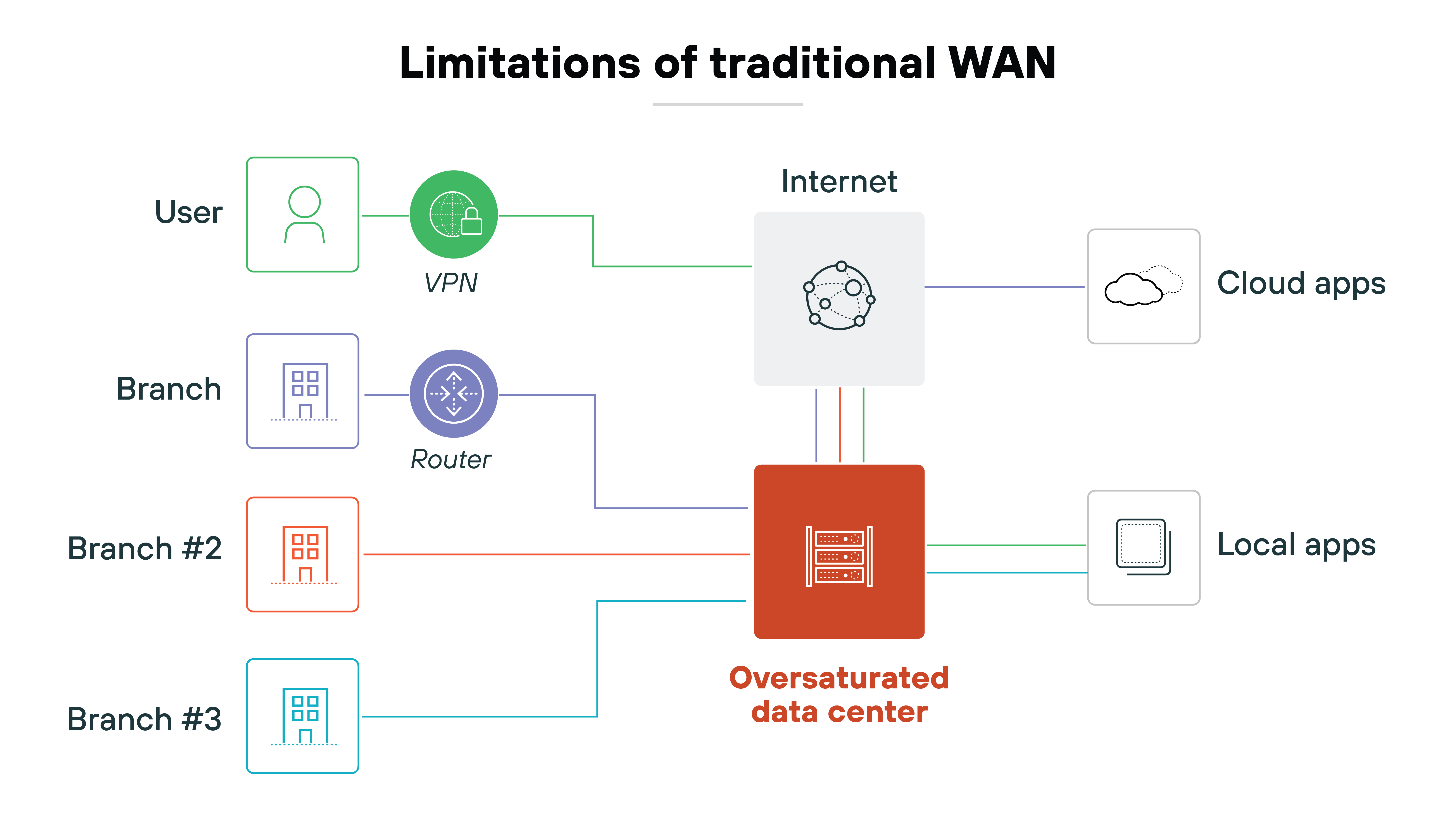

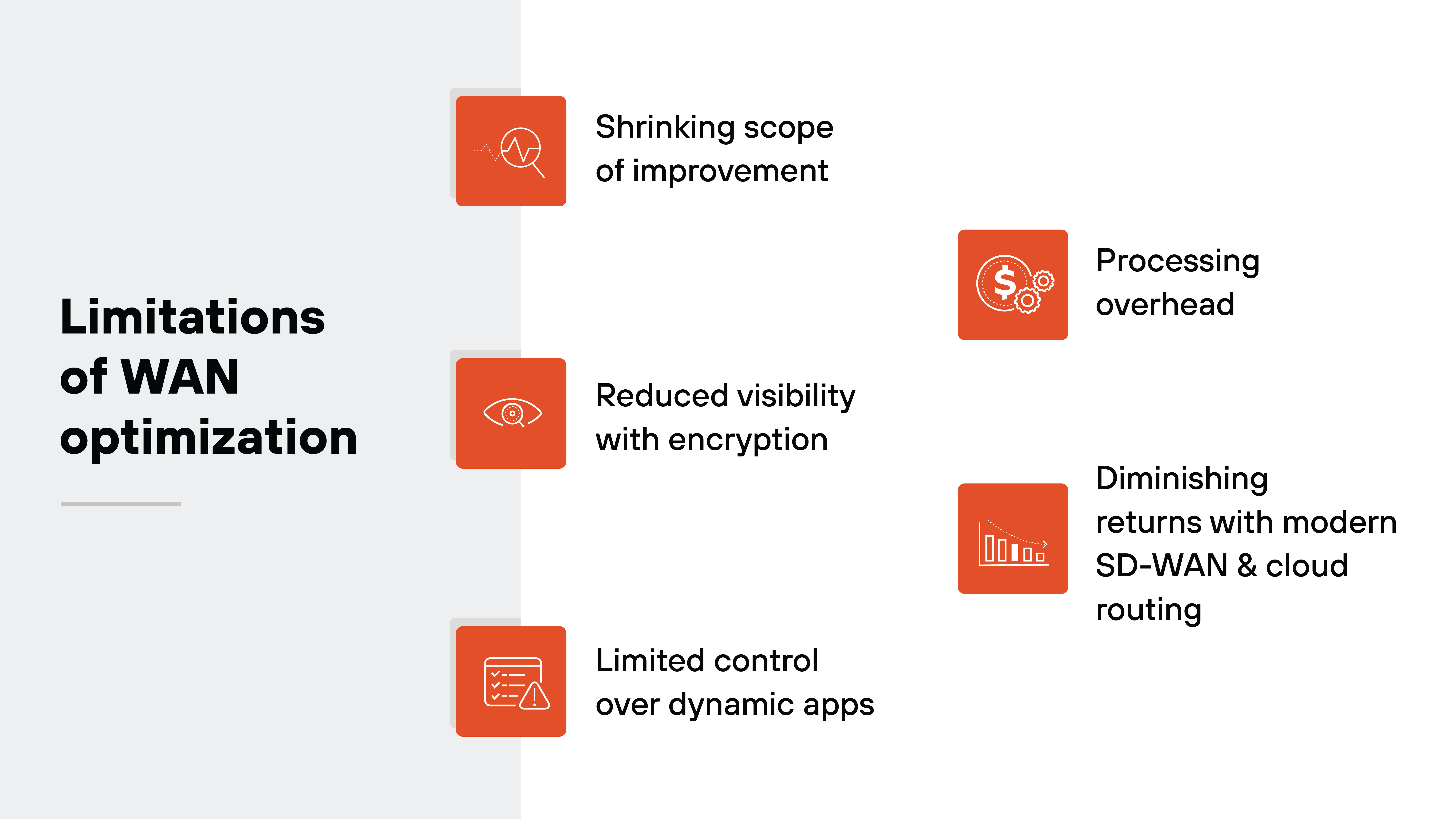

What are the limitations of WAN optimization?

WAN optimization still has clear value. But it also has limits. Those limits don't make it obsolete. They simply define where its impact begins to taper off.

Let's take a closer look at where those limitations appear in practice:

- The scope of improvement is shrinking.

Modern traffic is already optimized at the protocol level. Technologies such as HTTP/3 and QUIC manage congestion, retransmission, and multiplexing natively. That leaves less room for external optimization tools to add measurable gains.

- Encryption reduces visibility.

Most web and application traffic is encrypted end to end. While that protects privacy, it prevents payload inspection and disables compression or deduplication techniques that rely on repeated data patterns. As a result, optimization now focuses on transport behaviors rather than content-level processing.

- Dynamic applications limit control.

SaaS and API-driven workloads often use ephemeral connections that the WAN cannot directly monitor or prioritize. Without control of both endpoints, optimization cannot consistently apply or measure its policies across all traffic types.

- Software overhead adds complexity.

Software-based optimization consumes CPU and memory resources, particularly when combined with inline security inspection and routing. Performance can also vary by deployment model—hardware, software, or cloud-native—depending on available resources.

- Diminishing returns are inevitable.

As SD-WAN, cloud routing, and middle-mile services improve path selection and congestion management, the incremental benefits of traditional optimization narrow. It remains valuable, but now as one element of a broader, software-defined performance layer.

In short: WAN optimization delivers the most value where it can still influence transport efficiency. Its limits reflect how far protocols and architectures have evolved. Not a loss of relevance, but a natural boundary for a mature technology.

How does WAN optimization differ from SD-WAN and application acceleration?

WAN optimization, SD-WAN, and application acceleration often appear together. But they address different parts of the performance problem.

They're related, but not interchangeable.

Here's how they differ.

- WAN optimization improves performance at the data-plane level.

It makes the network path itself more efficient by reducing latency, packet loss, and jitter. The goal is to maximize throughput and maintain consistent transport behavior across variable links.

- SD-WAN operates above that layer.

It manages how traffic is routed between sites, clouds, and users. Controllers apply policy and analytics to decide which path each flow should take based on metrics like latency, bandwidth, or cost.

In other words: SD-WAN orchestrates the path; WAN optimization enhances how data moves along it.

- Application acceleration works higher still, at the application layer.

It focuses on how individual applications behave across the network. That can include protocol-specific optimizations, caching, or QUIC-based multiplexing to improve responsiveness for SaaS and cloud workloads.

Comparison: WAN optimization vs. application acceleration vs. SD-WAN

| Category | WAN optimization | SD-WAN | Application acceleration |
|---|---|---|---|
| Primary function | Improves data-plane performance and transport efficiency | Orchestrates traffic paths and enforces routing policies | Enhances application responsiveness and protocol behavior |
| Layer and scope | Operates at the data plane with packet- and flow-level visibility | Runs in the control plane with network-wide visibility | Functions at the application layer with session-level context |
| Core focus | Reduces latency, packet loss, and jitter | Selects optimal network paths based on policy and performance | Reduces application-layer latency and improves user experience |
| Key techniques | Forward error correction, pacing, congestion control, QoS | Dynamic path selection, traffic steering, centralized control | Protocol tuning (e.g., QUIC), caching, content optimization |
| Deployment model | Embedded in SD-WAN/SASE data planes or as software modules | Centralized controller with distributed edge devices | Integrated into SD-WAN/SASE edges or app delivery stacks |
| Outcome | Higher throughput and stable transport | Efficient use of WAN links and centralized management | Faster, more consistent application performance |
| Relationship to others | Enhances performance on SD-WAN-selected paths | Provides telemetry for optimization and acceleration | Uses telemetry from SD-WAN and WAN optimization for adaptive tuning |

Together, they form a layered system of performance control. SD-WAN establishes the best route for each session. WAN optimization makes that route perform smoothly and predictably.

Application acceleration ensures the user experience stays responsive, even for distributed or real-time applications.

In integrated architectures like SASE, these functions complement one another. Policy is managed centrally, while optimization and acceleration occur locally at the data plane or application edge.

Basically, SD-WAN chooses the best path. WAN optimization makes that path behave better.
